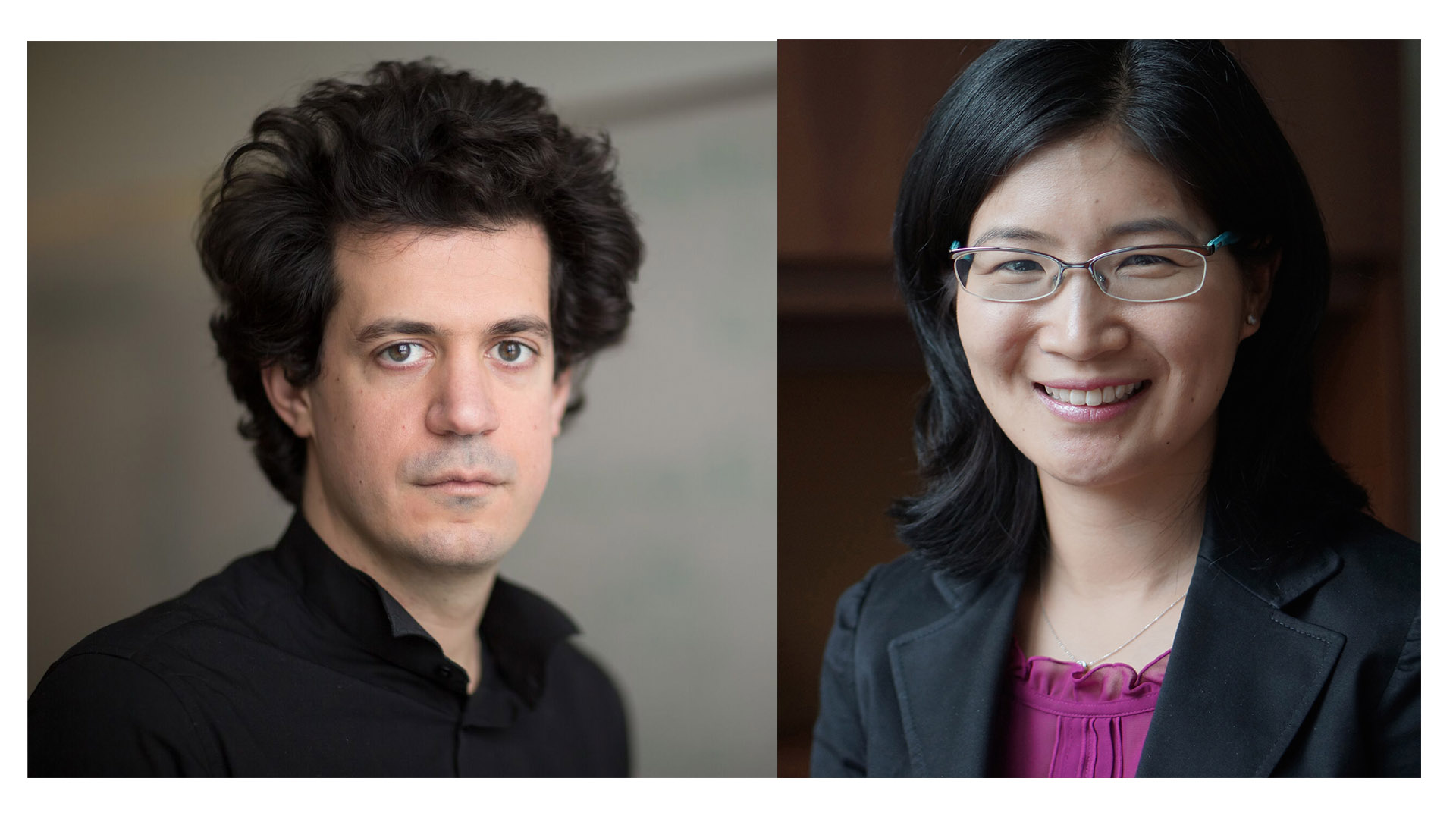

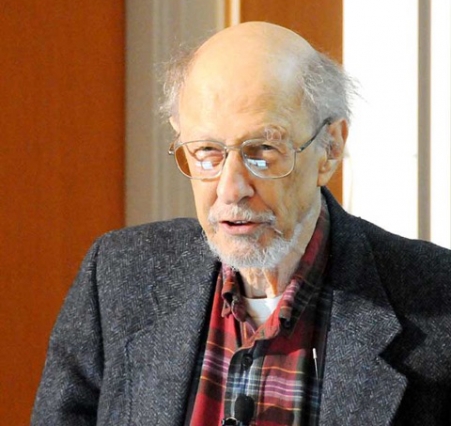

EECS sophomore Mussie Demisse demonstrates his team's "Smart Suit" for workouts. Photo: Gretchen Ertl

Stephanie Schorow | EECS Contributor

It doesn’t get any better than this — at least not at MIT. There’s the roar of raucous laughter as students play games or test products that they themselves have designed and built. There’s the chatter of questions asked and answered, all to the effect of “How did you do that?” and “Here’s what I did.”

To top it off, there’s the welcoming smell of pizza, slices being pulled from rapidly cooling boxes by a group of students and teaching assistants from the four sections of 6.08 (Introduction to EECS via Interconnected Embedded Systems). They have gathered for a special occasion during the last week of spring term: to show off their class final projects.

“This is the best class I have taken here,” says EECS sophomore Mussie Demisse, dressed in a hoodie with a square contraption on his back that could have fallen off Iron Man. He and his team have designed a “Smart Suit” that analyzes and assesses a user’s pushup form.

“The class has given me the opportunity to do research on my own,” Demisse says. “It’s introduced us to many things and it now falls on us to pursue the things we like.”

All photos by Gretchen Ertl for EECS

The course introduces students to working with multiple platforms, servers, databases, and microcontrollers. For the final project, four-person teams design, program, build, and demonstrate their own cloud-connected, handheld, or wearable Internet of Things systems. The result: about 85 projects ranging from a Frisbee that analyzes velocity and acceleration to a “better” GPS system for tracking the location of the MIT shuttle.

“Don’t hit the red apple! Noooo,” yells first-year student Bradley Albright as Joe Steinmeyer, EECS lecturer and 6.08 instructor, hits the wrong target while playing “Vegetable Assassins.” The object of the game is to slice the vegetables scrolling by on a computer screen, but Steinmeyer, using an internet-connected foam sword, has managed to hit an apple instead.

Albright had the idea for a “Fruit Ninja”-style game during his first days at MIT, when he envisioned the visceral experience of slicing the air with a katana, or Japanese sword, and hitting a virtual target. Then, he and his team of Johnny Bui and Eesam Hourani, both sophomores in EECS, and Tingyu Li, a junior in management, were able to, as they put it, “take on the true villains of the food pyramid: vegetables.” They built a server-client model in which data from the sword is sent to a browser via a server connection. The server facilitates communication between all components through multiple WebSocket connections.
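The article does not describe the team's code, but the relay role it attributes to the server can be sketched in a few lines. The example below is an in-memory stand-in, not real WebSocket code: the `Relay` class, its method names, and the message format are all hypothetical, and a real system would replace each delivery callback with a send over an open WebSocket connection.

```python
class Relay:
    """In-memory stand-in for a server that forwards messages between clients.

    Hypothetical sketch: each "connection" is just a callback that delivers
    a message, where a real server would hold an open WebSocket instead.
    """

    def __init__(self):
        # Maps a client name (e.g. "sword", "browser") to its delivery callback.
        self._clients = {}

    def connect(self, name, deliver):
        """Register a client and the function used to deliver messages to it."""
        self._clients[name] = deliver

    def disconnect(self, name):
        """Forget a client; unknown names are ignored."""
        self._clients.pop(name, None)

    def publish(self, sender, message):
        """Forward a message to every connected client except the sender."""
        for name, deliver in list(self._clients.items()):
            if name != sender:
                deliver(message)
```

In this sketch, the sword would call `publish("sword", reading)` for each swing, and the browser's callback would receive the reading and update the game.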

“It took a lot of work. Coming down to the last night, we had some problems that we had to spend a whole night finishing but I think we are all incredibly happy with the work we put into it,” Albright says.

Steinmeyer teaches 6.08 with two EECS colleagues: Max Shulaker, Emmanuel E. Landsman (1958) Career Development Assistant Professor, and Stefanie Mueller, X-Window Consortium Career Development Assistant Professor. The course was co-created by Steinmeyer and Joel Voldman, an EECS professor and associate department head.

Mueller, for one, is impressed with the students’ collaborative efforts as they developed their projects in just four weeks: “They really had to pull together to work,” she says.

Even projects that don’t quite work as expected are learning experiences, Steinmeyer notes. “I’m a big fan of having people do work early on and then go and do it again later. That’s how I learned the best. I always had to learn a dumb way first.”

Demisse and his team — Amadou Bah and Stephanie Yoon, both sophomores in EECS, and Sneha Ramachandran, a junior in EECS — confronted a few setbacks in developing their Smart Suit. “We wanted something to force ourselves to play around with electronics and hardware,” he explains. “During our brainstorming session, we thought of things that would monitor your heart rate.”

Initially, they considered something that runners might use to track their form. “But running’s pretty hard. [We thought,] ‘Let’s take a step back,’” Demisse recalls. “It was a natural evolution from that to pushups.”

They designed a zip-up hoodie with inertial measurement unit (IMU) sensors on an elbow, the upper back, and the lower back to measure the acceleration of each body part as the user does pushups for 10 seconds. That data is then analyzed and compared to the measurements of what is considered the “ideal” pushup form.
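The article does not say how the team compared a recording with the “ideal” form. One simple possibility, shown here purely as an illustration, is a root-mean-square (RMS) difference between the recorded acceleration trace and a template for each sensor. The function names, the sensor labels, and the choice of RMS are assumptions, not the team's method.

```python
import math


def rms_difference(recorded, ideal):
    """Root-mean-square difference between two equal-length acceleration traces."""
    if not recorded or len(recorded) != len(ideal):
        raise ValueError("traces must be non-empty and the same length")
    squared = [(r - i) ** 2 for r, i in zip(recorded, ideal)]
    return math.sqrt(sum(squared) / len(squared))


def score_form(readings, templates):
    """Return an RMS difference per sensor; lower means closer to the template.

    Both arguments map a sensor label (hypothetical names such as "elbow")
    to a list of acceleration samples.
    """
    return {name: rms_difference(readings[name], templates[name])
            for name in templates}
```

A per-sensor score like this could indicate which body part strays furthest from the template, for example a sagging lower back.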

A particular challenge: getting the data from various sources analyzed in a reasonable amount of time. The system uses a multiplex approach, but just “listens” to one input at a time. “That makes it easier to record data at a faster rate,” Demisse says.
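Listening to one input at a time can be pictured as round-robin polling: select a sensor, take one reading, move to the next. The sketch below assumes each sensor is represented by a zero-argument function that returns a reading; the function name and sensor labels are hypothetical, and on real hardware the read would go through whatever multiplexer and bus the team actually used.

```python
from itertools import cycle


def poll_round_robin(sensors, n_samples):
    """Take n_samples readings in total, visiting one sensor at a time in turn.

    sensors maps a label to a zero-argument callable that returns one reading
    (a stand-in for selecting a multiplexer channel and reading the IMU).
    """
    samples = {name: [] for name in sensors}
    order = cycle(sensors)  # repeats the sensor labels in insertion order
    for _ in range(n_samples):
        name = next(order)
        samples[name].append(sensors[name]())
    return samples
```

Because only one channel is active per read, each sensor is sampled at roughly the overall rate divided by the number of sensors.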

Another team developed a fishing game in which users cast a handheld pole and pick up “fish” viewed on a nearby screen. First-year Rafael Olivera-Cintron demonstrates by casting; a soft noise accompanies the movement. “Do you hear that ambient sound? That’s lake sounds, the sounds of water and mosquitos,” he says. He casts again and waits. And waits. “Yes, it’s a lot like fishing. A lot of waiting,” he says. “That’s my favorite part.” His teammates included EECS juniors Mohamadou Bella Bah and Chad Wood and EECS sophomores Julian Espada and Veronica Muriga.

Several teams’ projects involve music. Diana Voronin, Julia Moseyko, and Terryn Brunelle, all first-year students, are happy to show off “DJam,” an interconnected spin on Guitar Hero. Rather than pushing buttons that correspond to imaginary guitar chords, users spin a turntable to different positions — all to the beat of a song playing in the background.

“We just knew we wanted to do something with music because it would be fun,” Moseyko says. “We also wanted to work with something that turned. From a technical point of view, it was interesting to use that kind of sensor.”

Music from the Middle Ages inspired the team of Shahir Rahman and Patrick Kao, both sophomores in EECS, and Adam Potter and Lilia Luong, both first-years. Using a plywood version of a medieval instrument called a hurdy-gurdy, they created “Hurdy-Gurdy Hero,” which uses a built-in microphone to capture and save favorite songs to a database that processes the audio into a playable game.

“The idea is to give joy, to be able to play an actual instrument but not necessarily just for those who [already] know to play,” Rahman says. He cranks the machine and slightly squeaky but oddly harmonic notes emerge. Other students are clearly impressed by what they’re hearing. Olivera-Cintron sums up in just three words: “That is awesome.”
