Bolometers, devices that monitor electromagnetic radiation through heating of an absorbing material, are used by astronomers and homeowners alike. But most such devices have limited bandwidth and must be operated at ultralow temperatures. Now, researchers say they’ve found an ultrafast yet highly sensitive alternative that can work at room temperature, and that may be much less expensive.

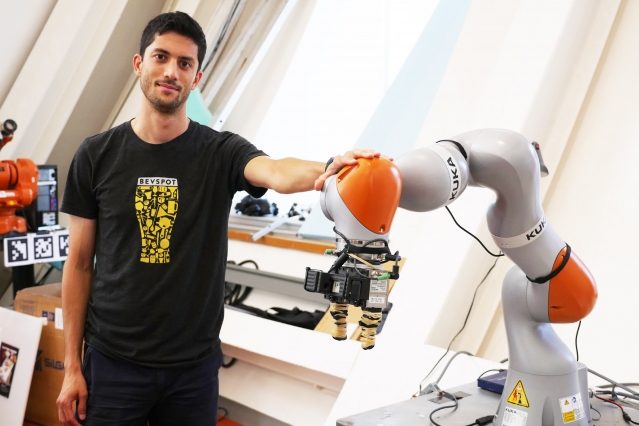

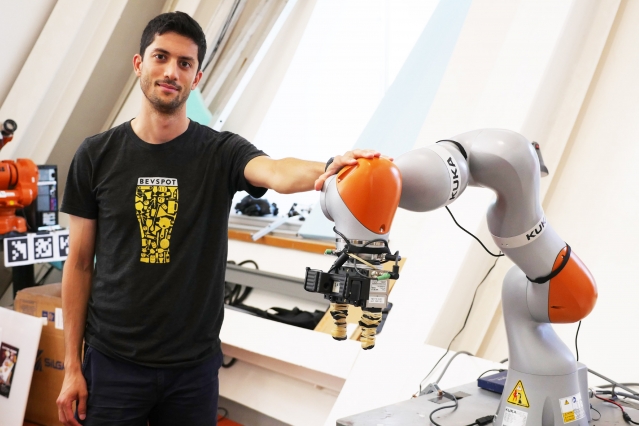

The findings, published today in the journal Nature Nanotechnology, could help pave the way toward new kinds of astronomical observatories for long-wavelength emissions, new heat sensors for buildings, and even new kinds of quantum sensing and information processing devices, the multidisciplinary research team says. The group includes EECS Professor and Research Laboratory of Electronics member Dirk Englund, recent MIT postdoc Dmitri Efetov, Kin Chung Fong of Raytheon BBN Technologies, and colleagues from MIT and Columbia University.

“We believe that our work opens the door to new types of efficient bolometers based on low-dimensional materials,” says Englund, the paper’s senior author. He says the new system, based on the heating of electrons in a small piece of a two-dimensional form of carbon called graphene, for the first time combines both high sensitivity and high bandwidth — orders of magnitude greater than that of conventional bolometers — in a single device.

“The new device is very sensitive, and at the same time ultrafast,” having the potential to take readings in just picoseconds (trillionths of a second), says Efetov, now a professor at ICFO, the Institute of Photonic Sciences in Barcelona, Spain, who is the paper’s lead author. “This combination of properties is unique,” he says.

The new system also can operate at any temperature, he says, unlike current devices that have to be cooled to extremely low temperatures. Although most actual applications of the device would still be done under these ultracold conditions, for some applications, such as thermal sensors for building efficiency, the ability to operate without specialized cooling systems could be a real plus. “This is the first device of this kind that has no limit on temperature,” Efetov says.

The new bolometer they built, and demonstrated under laboratory conditions, can measure the total energy carried by the photons of incoming electromagnetic radiation, whether that radiation is in the form of visible light, radio waves, microwaves, or other parts of the spectrum. That radiation may be coming from distant galaxies, or from the infrared waves of heat escaping from a poorly insulated house.

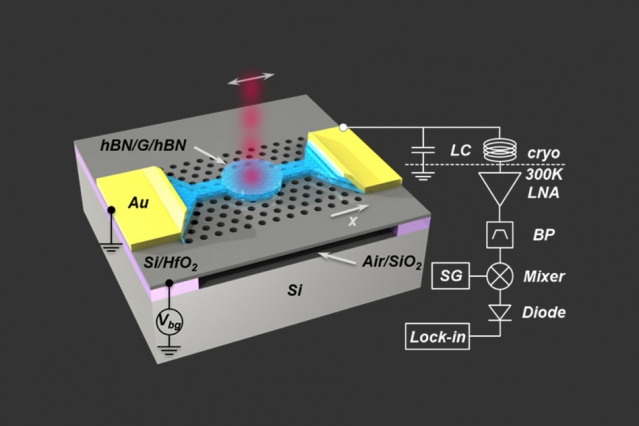

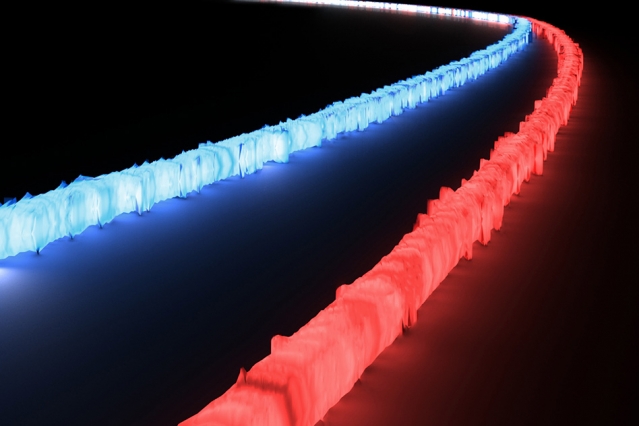

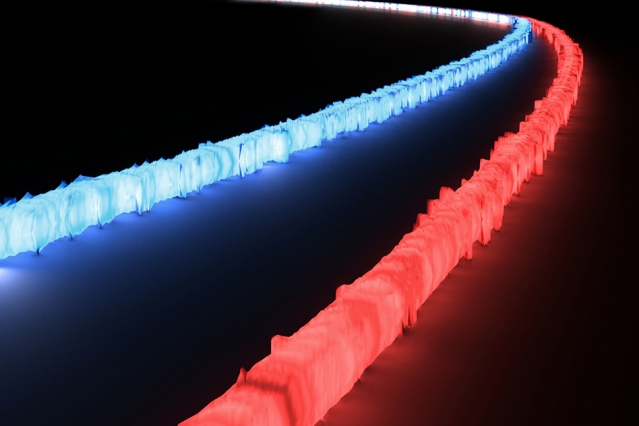

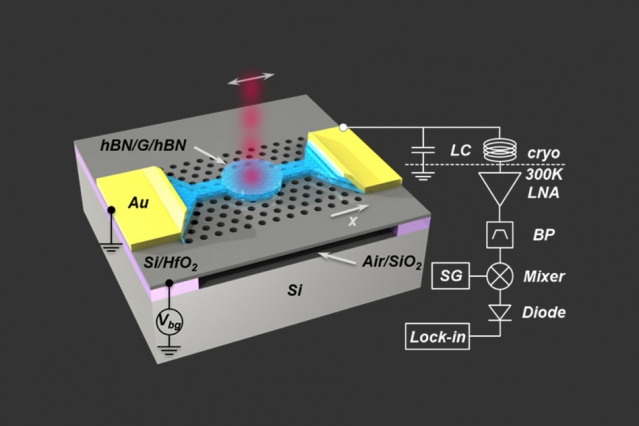

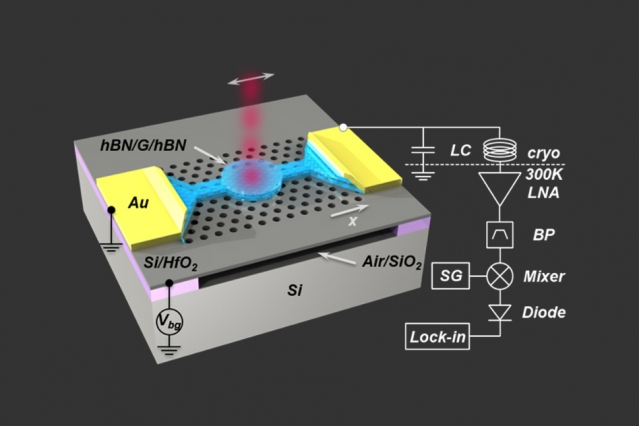

The device is entirely different from traditional bolometers, which typically use a metal to absorb the radiation and measure the resulting temperature rise. Instead, this team developed a new type of bolometer that relies on heating electrons moving in a small piece of graphene, rather than heating a solid metal. The graphene is coupled to a device called a photonic nanocavity, which serves to amplify the absorption of the radiation, Englund explains.

“Most bolometers rely on the vibrations of atoms in a piece of material, which tends to make their response slow,” he says. In this case, though, “unlike a traditional bolometer, the heated body here is simply the electron gas, which has a very low heat capacity, meaning that even a small energy input due to absorbed photons causes a large temperature swing,” making it easier to make precise measurements of that energy. Although graphene bolometers had previously been demonstrated, this work solves some of the important outstanding challenges, including efficient absorption into the graphene using a nanocavity, and the impedance-matched temperature readout.
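To make the heat-capacity argument concrete, here is a minimal sketch in Python. It assumes a constant heat capacity C, so the temperature rise from an absorbed energy E is simply ΔT = E / C, and it takes E = hf for a single photon of frequency f. The function names and the two heat-capacity values are hypothetical illustrations chosen only to show the trend; they are not figures from the paper.

```python
# Planck constant in joule-seconds (exact SI value).
PLANCK_H = 6.62607015e-34


def photon_energy(frequency_hz: float) -> float:
    """Energy in joules of one photon at the given frequency (E = h * f)."""
    return PLANCK_H * frequency_hz


def temperature_rise(absorbed_energy_j: float, heat_capacity_j_per_k: float) -> float:
    """Temperature rise in kelvin, assuming a constant heat capacity (dT = E / C)."""
    if heat_capacity_j_per_k <= 0:
        raise ValueError("heat capacity must be positive")
    return absorbed_energy_j / heat_capacity_j_per_k


if __name__ == "__main__":
    # One photon at 1 THz, a frequency inside the "terahertz gap".
    energy = photon_energy(1e12)

    # Hypothetical heat capacities in J/K, for illustration only.
    absorbers = [
        ("smaller heat capacity (hypothetical)", 1e-21),
        ("larger heat capacity (hypothetical)", 1e-18),
    ]
    for label, heat_capacity in absorbers:
        rise = temperature_rise(energy, heat_capacity)
        print(f"{label}: {rise:.3e} K")
```

For the same absorbed energy, the absorber with the smaller heat capacity shows the larger temperature swing, which is the effect Englund describes for the electron gas.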

The new technology, Englund says, “opens a new window for bolometers with entirely new functionalities that could radically improve thermal imaging, observational astronomy, quantum information, and quantum sensing, among other applications.”

For astronomical observations, the new system could help fill in some of the remaining wavelength bands for which practical detectors do not yet exist, such as the “terahertz gap” of frequencies that are very difficult to pick up with existing systems. “There, our detector could be a state-of-the-art system” for observing these elusive rays, Efetov says. It could also be useful for observing the very long-wavelength cosmic background radiation, he says.

Daniel Prober, a professor of applied physics at Yale University who was not involved in this research, says, “This work is a very good project to utilize the many benefits of the ultrathin metal layer, graphene, while cleverly working around the limitations that would otherwise be imposed by its conducting nature.” He adds, “The resulting detector is extremely sensitive for power detection in a challenging region of the spectrum, and is now ready for some exciting applications.”

And Robert Hadfield, a professor of photonics at the University of Glasgow, who also was not involved in this work, says, “There is huge demand for new high-sensitivity infrared detection technologies. This work by Efetov and co-workers reporting an innovative graphene bolometer integrated in a photonic crystal cavity to achieve high absorption is timely and exciting.”

For related content about this story, visit the MIT News website.
