
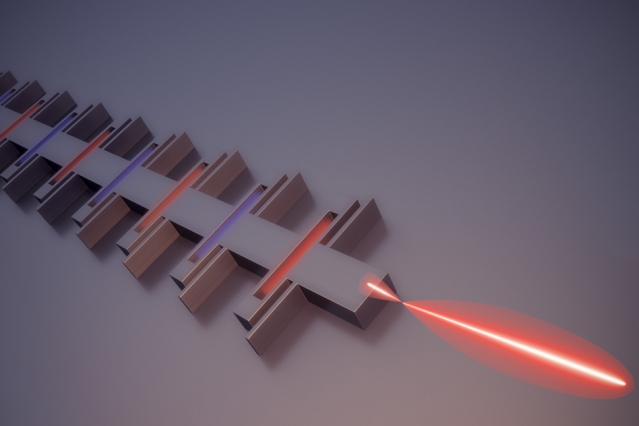

Assignments for Professor Saman Amarasinghe’s undergraduate course included consulting with mentors, interviewing users, writing promotional plans and, of course, leading the development of open-source apps. Image: MIT News

Larry Hardesty | MIT News

Open-source software is free software whose underlying code, or “source code,” is also freely available. Open-source development projects often involve hundreds or even thousands of volunteer coders scattered around the globe. Some of the best known are the Linux operating system, the Firefox web browser, and the WordPress blogging platform.

This past spring, MIT professor of electrical engineering and computer science Saman Amarasinghe offered 6.S194 (Open-Source Entrepreneurship), a new undergraduate course on initiating and managing open-source development projects. The course had no exams or problem sets; instead, the assignments included consulting with mentors, interviewing users, writing a promotional plan — and, of course, leading the development of an open-source application.

The course is an example of an academic trend toward project-based curricula, which have long had vocal supporters among educational theorists but have drawn renewed attention with the advent of online learning, which turns lectures and discussions into activities that students can pursue on their own schedules.

But where many project-based undergraduate engineering classes result in designs or products that may not make it out of the classroom, the goal of the new MIT class was a public software release, complete with a marketing campaign. And the students learned not only the technical skills required to complete their projects, but also the managerial skills required to initiate and guide them.

The creation of the course had a number of different motivations, Amarasinghe explains. “MIT is a very structured place, and we ask so much of our students, sometimes they don’t have time to do anything interesting outside,” he says. “When you talk to students, they say, ‘We have ideas, but without credit, we don’t have time to do it.’”

“The other thing that happened was that for the last three, four years, Facebook had this Facebook Open Academy that got students from multiple universities and paired them up with open-source projects,” Amarasinghe adds. “What I found was a lot of times MIT students were somewhat bored with some of those projects because it’s hard to meet MIT expectations. We have much higher expectations of what the kids can do.”

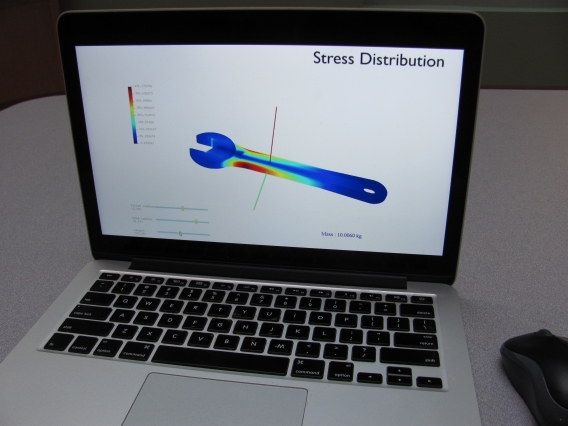

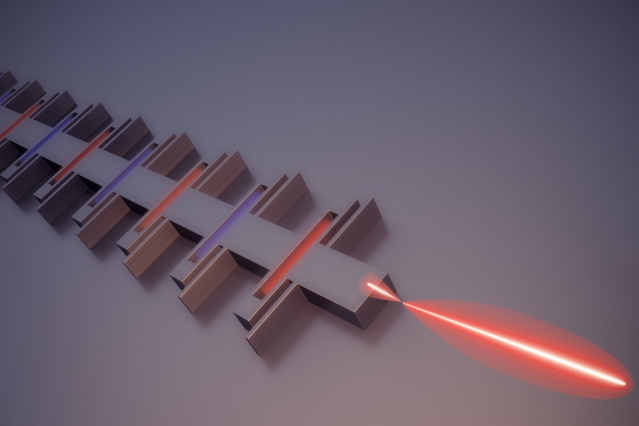

A third factor, Amarasinghe says, is that many research projects in computer science spawn software that, even though it represents hundreds of hours of work by brilliant coders, never makes it out of the lab. Open-source projects that clean that software up, fill in gaps in its functionality, and create interfaces that make it easy to use could mean that researchers working on related projects, instead of building their own systems from scratch, could modify the code of existing systems, saving a huge amount of time and energy.

Entrepreneurial expectations

Classes for Open-Source Entrepreneurship were divided between lectures and “studio” time, in which teams of students could work on their projects. Amarasinghe lectured chiefly on technical topics, and Nick Meyer, entrepreneur-in-residence at the Martin Trust Center for MIT Entrepreneurship, lectured on topics such as market research and marketing. During studio time, both Amarasinghe and Meyer were available to advise students.

Before the class launched, Amarasinghe and his teaching assistant, Jeffrey Bosboom, a graduate student in electrical engineering and computer science, had identified several MIT research projects that they thought could be the basis of useful open-source software. But students were free to propose their own projects.

After selecting their projects, the students’ first task was to meet with — or, in the case of the students who proposed their own projects, identify and then meet with — mentors, to sketch out the scope and direction of the projects. Then, for each project, the students had to identify and interview four to six potential users of the resulting software, to determine product specifications.

“When you start out with the project, you have certain preconceptions about what the problem is and what you have to do to solve that problem,” says Stephen Chou, an MIT graduate student in electrical engineering and computer science, who audited the course. “One of the first things we had to do was to look for potential users of our project, and when you talk to them, you realize that the priorities that you start out with aren’t necessarily the right ones. At the same time, some of the people we talked to were working in fields that were completely unfamiliar, at least to me. So you start learning more about their problems, and sometimes you get completely new ideas. It’s a good way to orient yourself. That was new to me, and it was very helpful.”

The third stage of the project was the establishment of a software development timeline, and at the end of the semester, as the projects drew to completion, the students’ final assignment was the development of a promotional plan.

The projects

Several of the class projects built on software prototypes that had been developed by the students themselves — or by their friends. One project, Gavel, was a system for scoring entries in contests such as science fairs or hackathons, in which teams of programmers develop software to meet specific criteria over the space of days. The initial version had been written by an MIT undergrad who was himself a frequent hackathon participant, and two of his friends agreed to use Amarasinghe’s course to turn the software into an open-source project.

Typically, hackathon judges use some sort of absolute rating scale, but this is a notoriously problematic approach: Different judges may calibrate the scales differently, and over the course of a contest, judges may recalibrate their own scales if they find that, in assigning their first few scores, they over- or underestimated the competition.

A better approach is to ask judges to perform pairwise comparisons. Comparisons are easier to aggregate across judges, and individual judgments of relative value tend not to fluctuate. Gavel is a web-based system that sequentially assigns judges pairs of contestants to evaluate, selecting the pairs on the fly to ensure that the final cumulative ranking will be statistically valid.
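To make the aggregation idea concrete, here is a minimal Python sketch of one standard way to turn pairwise judgments into a ranking, the Bradley-Terry model. It is an illustration only: the article does not say which statistical model Gavel uses, the function names (`bradley_terry`, `rank`) and the sample judgments are hypothetical, and the sketch does not attempt Gavel's on-the-fly selection of pairs.

```python
from collections import defaultdict


def bradley_terry(comparisons, iterations=100):
    """Estimate a strength per entry from (winner, loser) pairs.

    Illustrative Bradley-Terry fit using the classic iterative update.
    This is an assumed stand-in, not Gavel's actual algorithm.
    """
    wins = defaultdict(int)      # total wins per entry
    meetings = defaultdict(int)  # number of comparisons per unordered pair
    entries = set()
    for winner, loser in comparisons:
        wins[winner] += 1
        meetings[frozenset((winner, loser))] += 1
        entries.update((winner, loser))

    strength = {entry: 1.0 for entry in entries}
    for _ in range(iterations):
        updated = {}
        for entry in entries:
            denominator = sum(
                meetings.get(frozenset((entry, other)), 0)
                / (strength[entry] + strength[other])
                for other in entries
                if other != entry
            )
            updated[entry] = wins[entry] / denominator
        # Normalize so the strengths sum to 1 and stay comparable.
        total = sum(updated.values())
        strength = {entry: value / total for entry, value in updated.items()}
    return strength


def rank(comparisons):
    """Return entries ordered from strongest to weakest."""
    strength = bradley_terry(comparisons)
    return sorted(strength, key=strength.get, reverse=True)


# Hypothetical judgments: each pair was compared four times.
judgments = (
    [("A", "B")] * 3 + [("B", "A")]
    + [("B", "C")] * 3 + [("C", "B")]
    + [("A", "C")] * 3 + [("C", "A")]
)
print(rank(judgments))  # ['A', 'B', 'C']
```

Because every judge answers only "which of these two is better?", the inputs need no shared rating scale, which is the property the pairwise approach relies on.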

Another of the projects, Homer, also reflects the preoccupations of undergraduates at a technical university. Homer is based on psychological research on the frequency with which factual information must be repeated before it will reliably lodge itself in someone’s memory. It’s essentially a digital flash-card system, except that instead of picking cards entirely at random, it cycles them through at intervals selected to maximize retention.
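The scheduling idea can be sketched with a Leitner-style box system, a common way to implement spaced repetition. This is an assumption for illustration, not a description of Homer's actual scheduler: the interval values, the `Card` class, and the `review` and `due_cards` helpers are all hypothetical.

```python
from dataclasses import dataclass

# Days to wait before the next review, indexed by box number.
# These values are hypothetical, chosen only for illustration.
INTERVALS = [1, 2, 4, 8, 16]


@dataclass
class Card:
    front: str
    back: str
    box: int = 0      # higher box = better remembered
    due_day: int = 0  # day number on which the card is next shown


def review(card, correct, today):
    """Move the card between boxes and schedule its next review."""
    if correct:
        card.box = min(card.box + 1, len(INTERVALS) - 1)
    else:
        card.box = 0  # a missed card starts over with short intervals
    card.due_day = today + INTERVALS[card.box]
    return card


def due_cards(cards, today):
    """Return the cards whose review date has arrived."""
    return [card for card in cards if card.due_day <= today]


card = Card("tensor", "higher-dimensional analogue of a matrix")
review(card, correct=True, today=0)   # box 1, due on day 2
review(card, correct=True, today=2)   # box 2, due on day 6
review(card, correct=False, today=6)  # back to box 0, due on day 7
```

Cards answered correctly are shown at growing intervals, while missed cards return to frequent review, in contrast to drawing cards purely at random.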

Other projects, however, grew out of academic research at MIT. One project, dubbed Taco (for tensor algebra compiler), was based on as-yet-unpublished research from Amarasinghe’s group. A tensor is the higher-dimensional analogue of a matrix, which is essentially a table of data. Mathematical operations involving huge tensors are common in the internet age: All the ratings assigned to individual movies by individual Netflix subscribers, for instance, constitute a three-dimensional tensor.

If the tensors are sparse, however (that is, if most of their entries are zero), there are computational shortcuts for manipulating them. And again, in the internet age, many tensors are sparse: Most Netflix subscribers have rated only a tiny fraction of the movies in Netflix’s library.

Taco provides a simple, intuitive interface to let data scientists describe operations involving sparse and nonsparse tensors, and the underlying algorithms automatically generate the often very complicated computer code for executing those operations as efficiently as possible.
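To illustrate the kind of shortcut that sparsity allows, the following Python sketch stores only the nonzero entries of a three-dimensional tensor in a dictionary keyed by coordinates, then computes an inner product by visiting stored entries alone. It is a hand-written illustration under assumed names (`sparse_inner_product`, the sample tensors), not Taco's interface or the code Taco generates.

```python
def sparse_inner_product(a, b):
    """Sum of a[idx] * b[idx] over all coordinates.

    Both tensors are dicts mapping index tuples to nonzero values;
    any coordinate absent from a dict is treated as zero.
    """
    # Iterate over the smaller tensor so the work scales with the
    # number of stored nonzeros, not with the full tensor size.
    if len(a) > len(b):
        a, b = b, a
    return sum(value * b[idx] for idx, value in a.items() if idx in b)


# Two hypothetical sparse tensors with three nonzero entries each.
a = {(0, 1, 2): 4.0, (3, 0, 1): 5.0, (2, 2, 2): 1.0}
b = {(0, 1, 2): 0.5, (2, 2, 2): 2.0, (1, 1, 1): 3.0}

print(sparse_inner_product(a, b))  # 4.0
```

A dense version of the same computation would loop over every coordinate of the tensor, almost all of which hold zeros and contribute nothing to the sum; here only the two coordinates stored in both tensors are multiplied.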

Other projects from the class — such as an interface for a database of neural-network models, or a collaborative annotation tool designed for use in the classroom — also grew out of MIT research. But no matter the sources of the projects, the students were the ones steering them to completion.

“They had a lot more ownership of a project than being part of a very large project that has thousands of contributors, finding a few bugs or adding a few features,” Amarasinghe says. “They got to think of the big-picture issues — how to build a community, how to attract other programmers, what sort of licensing should be used. MIT students should be the ones who are doing new open-source projects and leading some of these things.”