Meg Murphy | MIT News

Five MIT EECS students take their startups to San Francisco for a summer of innovation.

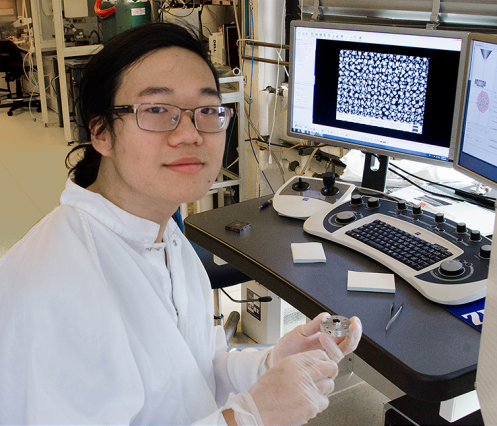

Left to right: MIT seniors Mohamed “Hassan” Kane, Guillermo Webster, and Kevin Kwok work on their startup projects at the “Sandcastle” in San Francisco. The orange cast on Kwok’s leg is the result of a trampoline accident, and has inspired plenty of teasing from his MIT housemates. Photo: Andrei Ivanov '16.

On a foggy night in San Francisco, a Bat-Signal appears in the sky. It flickers above a house on the highest hilltop in the city, where five MIT students live in what other people call a “hacker house.” It’s a label the students avoid.

Inside, the lights are on at all hours. It has been that way since the group arrived in June, set down their things, claimed spots on the furniture, and opened their laptops. They've barely looked up since, except to blow off steam, when they send up Bat-Signals, invent gadgets, or take their waterproof air mattresses for a row.

Rising MIT seniors Guillermo Webster, Anish Athalye, Kevin Kwok, Mohamed “Hassan” Kane, and rising junior Laser Nite swap news about their various startups, which they refer to collectively as “Project Sandcastle” — their preferred moniker for the residence. They are spread out on an expansive sectional couch and a wicker chair, tapping away on their laptops. All five are electrical engineering and computer science majors, and all five of them are in San Francisco to pursue startup ideas.

For each, it was a choice that came at a cost. Many of their classmates took internships at industry giants, such as Google, Facebook, and Apple. Within the group, several received lucrative offers, and the majority worked at enviable resume-building gigs last summer. The five estimate that their collective summer income would have been at least $100,000, but none of them cracked.

“We are the diehards,” says Webster. “Nobody in this room was willing to accept an internship. We were going to work on our projects no matter what.”

Working together, the students approached the Sandbox Innovation Fund and the Department of Electrical Engineering and Computer Science (EECS) for help in covering their summer living expenses. After spirited lobbying, particularly by Kane, MIT agreed to pitch in. “This is an exciting experiment in how we can support our students to pursue their passions,” says Ian A. Waitz, the dean of engineering and faculty director of the Sandbox Innovation Fund Program.

For their part, the students are thrilled they didn’t end up in their parents’ basements, or some other isolated location, trying to stay in touch via videoconference. “Most of us didn’t think MIT would ever give us money to come out here and be creative,” says Webster. He snaps his gum, flips shut his computer, and smiles. “And here we are.”

“Our first priority was to be together this summer,” Athalye chimes in. “Our next was to be in San Francisco.”

Project Sandcastle

Webster, Athalye, Kwok, Kane, and Nite each has his own workspace — a particular stretch of couch or chair where they always seem to end up. It’s dinner time and they are firing news and updates back and forth as they type. Kane came up with the idea for the weekly ritual, but in its original version, there was a two-minute timer to keep things moving. Nobody pays attention to that anymore. Often they’ll spend two hours on a single project — heatedly asking each other things like: “How are you thinking about users?” “What about security?” They sum up the gist of the conversation as: “Change this. Start over.”

The sessions are loud, fun, productive, and lead them to entertain different approaches, new thoughts, and as they say “a lot of suggestions of various utility.” They also typically involve takeout food. Ordering out has become an art form with them. They deftly cycle through deals from Sprig, UberEATS, Freshly, and so on. As Webster says, “There’s a huge amount of venture capital going into food startups in San Francisco. It’s like a free food program.”

Their projects are ambitious. Kwok and Webster have created Carbide, a new programming environment that interleaves code, charts, math, and prose to help people compose, teach, and understand coding. It’s based on the idea of “notebook programming,” where code and prose are interleaved in blocks, known as cells. In Carbide, people can inspect, visualize, and manipulate any part of the expression of the code. No need to break up your code to see what goes on or to explain it. Carbide, say Kwok and Webster, represents “a step toward the future of programming itself.”

Meanwhile, Athalye and Kane intend to transform online learning with LearnX, which will use artificial intelligence and machine-learning algorithms to organize educational material. Athalye came up with the idea during an MIT class: He was listening to a professor talk about online education and realized “people spend so much time learning how to learn stuff.” Imagine you are making a video game and want to learn how to do ray tracing. You register for an online university course in computer graphics. You discover a prerequisite is linear algebra, and so take an entire course in that too. You end up spending a lot of time learning material that is not necessary for ray tracing. But, says Athalye, “What if you could type in what you want to learn and get an exact set of instructions?”

Like the others, Nite’s creation, Websee, aims to influence society at large. He is developing an information crowdsourcing platform enabling people to combine their browsing activity anonymously to discover what’s happening across the web in real time. You install a browser extension to passively share what you see, which is combined anonymously with other users to create a more open, democratized, and unbiased aggregator of web content. Nite says it will lead to “more transparency, diversity, and public influence on our information sources online.”

World-changing ideas

San Francisco is saturated with startups focused on the trivial, or as some say, “getting your sushi faster.” Business magnate Bill Gates has observed that innovation is thriving in California but “half of the companies are silly.” He has also said that from within that noise, a handful of brilliant world-changing ideas will surface. The Project Sandcastle students are aiming for the latter, and relying on a summer of mutual support and collaboration to get it done.

“These students came to Sandbox with ideas they wanted to pursue — and a plan for how to pursue them,” says Sandbox Executive Director Jinane Abounadi SM ’90, PhD ’98. “They wanted help running an experiment on innovation itself.”

Along with providing financial resources, Sandbox keeps students connected to activities in Boston through mentorship from MIT Venture Mentoring Service, alumni, and experienced professionals. Abounadi says Sandbox is working with the students and others to evaluate the educational benefits of investments like this one, adding that “regardless of what happens with their own ideas, we know they’re going to learn a lot. That’s how we think about success.”

Crashing at the node

The Sandcastle is in Miraloma, a neighborhood of curving hilltop streets and Art Deco homes. It is a fair bet that most other residents do not, like Kwok and Webster, sleep on air mattresses. Spending money on a bed never even occurred to them. “What? Why do that?” asks Kwok. If the place had not been partially furnished, they’d probably all be working on the floor.

Their neighborhood is quieter than enclaves like the Mission District, a startup haven four miles away where the young, broke, and ambitious tend to gravitate. In the minds of the Sandcastle group, they are “really far” from the action. It works for them, though. Friends in the tech community, many of them MIT students, pass through a lot. “It’s like a node,” says Laser Nite. Growing up, he lived in “a hippie town” in Iowa and attended the Maharishi School of the Age of Enlightenment, a.k.a. high school. He was artistic from an early age and realized that creativity requires interactivity.

“Just look over there,” says Kwok, taking a bite of an egg salad sandwich that Nite has prepared. “That’s Logan, our favorite piece of furniture.” Rising sophomore Logan Engstrom, also an EECS major, is working on his laptop in an adjoining room. He waves. Engstrom is an intern at a big tech company in Cupertino, and crashed at the Sandcastle the night before.

Everything is connected

San Francisco is sometimes called “the Hollywood of Technology.” The concentration of well-known tech startups within the city proper, a grid area of 7 miles by 7 miles, includes Twitter, Uber, Airbnb, Pinterest, Dropbox, Yelp, and others. Also here are big tech internships, venture capitalists, networking opportunities, and a key element for startups: a market of early adopters.

“Everything is here and everything is connected,” says Anish Athalye. He and Kane, a native of Côte d'Ivoire, are part of Greylock X, an initiative in which the well-known Bay Area venture capital firm adopts a dozen talented young people and connects them directly to this network with invitations to social events where they can meet the heavy hitters in Silicon Valley.

The pair are measured and articulate, and they often act as an organizing (and steadying) influence at Sandcastle. They began LearnX after talking about online learning at a tea party — as in, a real tea party. About 15 to 20 students gathered in an MIT dorm room, drinking tea. “Instead of drinking or dancing,” says Athalye, “we talk about ideas.”

Creative paths to MIT

There is a massive cardboard check in a corner of the Sandcastle living room. It is from the Greylock Hackfest, which was hosted at Facebook this summer. Athalye, Engstrom, Kwok, and Webster won the $10,000 grand prize for a system that turns any laptop into a touchscreen with about $1 of hardware. (The earnings, they say, “mean a lot of Instacart orders.”)

The project was an offshoot of an idea Kevin Kwok came up with in junior high school. He grew up in Virginia and sold his first app when he was 15. As a teenager, he liked to work on and blog about his “little projects.” When he was still in high school an MIT student came across his work, encouraged him to apply, and offered to write a recommendation letter. And that, says Kwok, is how a high school kid with “an okay GPA and not great SAT scores,” who was rejected by his state school, landed at MIT. “I’m glad to have finally used that junior high idea for something,” he says.

Guillermo Webster grew up in Los Angeles, the child of a painter and a musician, and attended an arts school with “no walls, homework, grades, or calculus.” He concentrated in cello and modern dance, but self-studied math and excelled in the sciences, leading to MIT.

Webster and Kwok — who has one leg in plaster after a trampoline accident — have been working on projects together since they met in an MIT Media Lab first-year program. They worked through the night on a final presentation, went back to their dorm rooms, set their alarms — and slept through them. “We’ve gone on to sleep through bigger and better things,” Webster jokes.

Two years ago, the two created “Project Naptha,” browser extension software for Google Chrome that allows users to highlight, copy, edit, and translate text within images. It currently has more than 200,000 users. More recently, they created matching rings, which from a distance resemble the “brass rat,” or MIT class ring. In fact, they are 3-D-printed joke rings for the Class of 2017 that feature, as Kwok says, “a dog elegantly facing left, adjacent the moon.”

The real world of innovation

Before coming to the Sandcastle, Laser Nite slept in a closet at Thiel Manor. The well-used Atherton mansion — so nicknamed because many living there have been or are fellows in a two-year accelerator program funded by early Facebook investor Peter Thiel — is packed with “an extremely eclectic mix of people,” and is a convening place for people “who want to create things that are awesome.” Nite lists off a sampling of its projects: photonic computers, mind-mapping interfaces, augmented reality neural investigation software, virtual reality education software, self-driving cars, programmable spreadsheets, and machine learning-based physical component optimization.

There are many “hacker houses” that are imposters — predatory “startups” that rent bunk beds to unwitting souls for thousands a month. In the real ones like the Sandcastle, though, Nite says “there's an underlying energy and optimism about creating the future, and a warmth and open-mindedness toward people and new ideas.”

“We hold the somewhat quirky shared belief that we can actually do big things that change the world for the better,” says Nite. “And here we are.”
