Terri Park | MIT Innovation Initiative

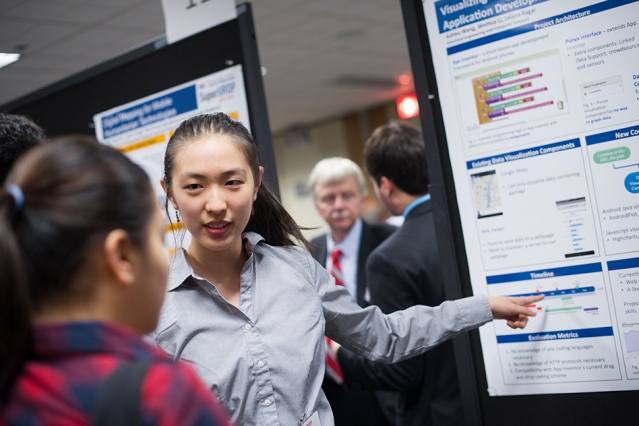

Panel highlights women innovators and entrepreneurs from a range of fields.

All photos by Rose Lincoln.

An all-star panel of women entrepreneurs shared their experiences as part of the evolving innovation ecosystem at “Empowering Innovation and Entrepreneurship,” the capstone event of StartMIT, an IAP class that introduces students to the elements of entrepreneurship.

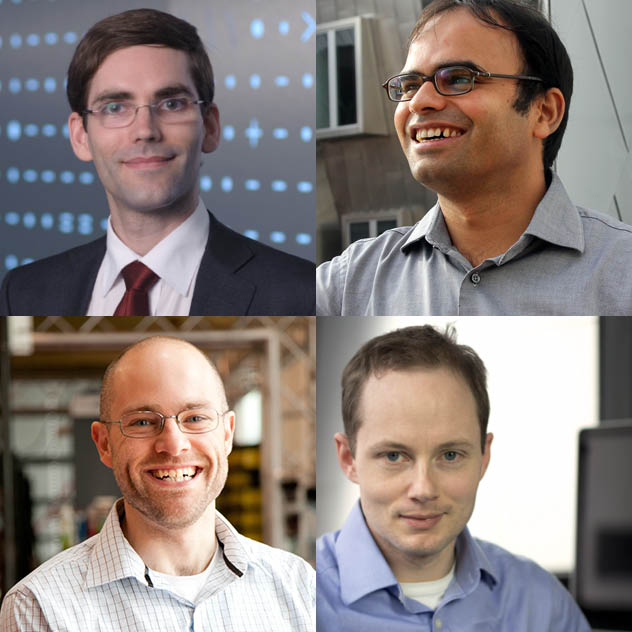

Moderated by Jesse Draper, creator and host of the Emmy-nominated “Valley Girl Show,” the Tuesday night panel included Susan Hockfield, president emerita of MIT; Helen Greiner ’89, SM ’90, CEO and founder of CyPhy Works and co-founder of iRobot; Payal Kadakia ’05, CEO and co-founder of ClassPass; and Dina Katabi, the Andrew and Erna Viterbi Professor of Electrical Engineering and Computer Science.

Draper, a former Nickelodeon star, brought her trademark approachable style to the event, inviting panelists to jump into the discussion by asking how MIT had shaped their careers.

“The key critical characteristic I think I found in myself and in other entrepreneurs is the ability to problem solve, and that’s the thing I learned here the most in my curriculum,” Kadakia shared. She emphasized that this skill has carried her through many phases of developing ClassPass, the fitness membership startup she launched in 2013. “It taught me to always take something and figure out the solution; I never got stuck.”

The advice hit home for the audience, made up of alumni and students enrolled in StartMIT, a program developed by the Department of Electrical Engineering and Computer Science, supported by the Innovation Initiative, and chaired by EECS department head Anantha Chandrakasan. Over the last two weeks, the undergraduates, graduate students, and postdocs in the program have heard from founders and innovators in startups, industry, and academia about the challenges they faced in their own careers.

For Greiner, it was the power of the MIT network that proved to be most valuable. “I met my business partners at MIT and many other people in my network. You want to use this opportunity while you’re at MIT to meet the professors, meet other people that are in your field, because you never know where people will end up.”

Shifting the focus to MIT’s history and its role in creating the current entrepreneurship ecosystem of greater Boston, Hockfield shared that during her tenure as president, she saw a new wave of innovation rising in the region. Knowing MIT could foster it, she led the Institute to “participate in accelerating the development of Kendall Square by being a really good partner to the city and to the companies. My role was to pour a little gasoline on the flames.”

Turning to fundraising, Draper asked the panel which matters most to investors: the idea, the product, or the user base.

For Katabi, who has seen a couple of startups spin out of her lab at MIT, what matters most is the promise a founder makes to investors. “People don’t see much at the beginning. Your promise is in the future. So it’s you, it’s the idea and it’s the market. It’s also that the promise once delivered it will make a difference.”

An audience member asked the panel what catalyzed their decision to leap into entrepreneurship. Greiner replied that she made the move early in her career, co-founding iRobot shortly after graduating from MIT. She added that it took the company 12 years to reach the market, calling it “the longest overnight success story you’ve ever seen.” She noted that building iRobot had its low points as well, such as when funded projects couldn’t move forward, and that “it’s really not always about the idea, it’s about the timing of the idea, which is just as critical.”

When asked about the challenges of being a woman in technology, Greiner answered, “You have to look at everything for what it is. It can be a double-edged sword. Back in 1990, there were even fewer women in technology. That was bad, but on the other hand when I would go to meetings, people would remember me as the ‘robot lady.’ Everything’s a double-edged sword, and if you look at the positive, you keep going forward, because we need women in tech.”

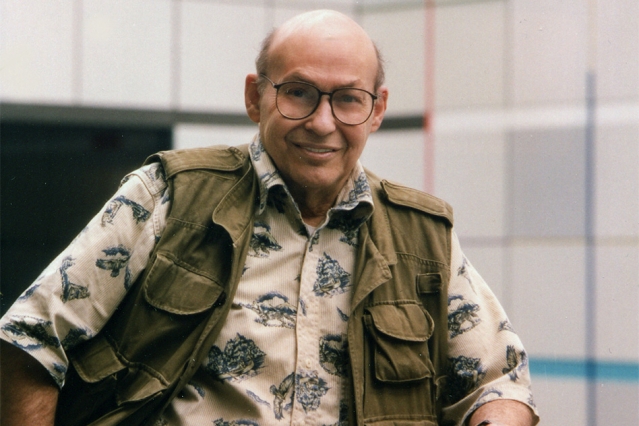

Joi Ito, director of the MIT Media Lab, offered closing remarks on the innovative research happening at the Media Lab. Ito, who spent much of his career as an entrepreneur and venture capitalist, commented on the complementary roles that academia and startups play in developing new technology.

The difference between the startup mindset and the academic one was particularly interesting to Ito, who joined MIT in 2011. Whereas startups must focus on short-term goals and the marketability of their products, academics can spend time on long-term goals and on the math and science underpinning new technology, he remarked; both ways of thinking play a role in deploying new technologies.

“I’ve spent the last five years trying to understand how technology makes it out into the real world,” Ito said. “Having done that I see the importance of translation of technology into the real world, and the role that startups have in that.”
