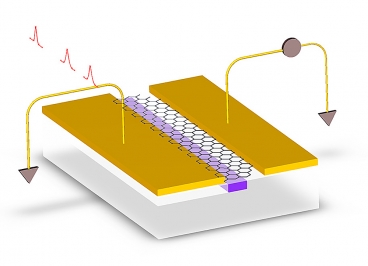

Optoelectronic computer chips that use light rather than electricity to move data are much closer to reality thanks to the clever application of graphene (on silicon) by researchers from MIT, Columbia University and IBM's T.J. Watson Research Center, working under the direction of Dirk Englund, the Jamieson Career Development Assistant Professor of Electrical Engineering and Computer Science at MIT. The work, titled "Silicon photonics: Graphene benefits," appears in Nature Photonics, Sept. 15, 2013. Englund, a member of the Research Laboratory of Electronics (RLE) and the Microsystems Technology Laboratories (MTL), also directs the Quantum Photonics Laboratory.

[Image: In a new graphene-on-silicon photodetector, electrodes (gold) are deposited, slightly asymmetrically, on either side of a silicon waveguide (purple). The asymmetry causes electrons kicked free by incoming light to escape the layer of graphene (hexagons) as an electrical current. Graphic courtesy of researchers.]

Read more in the Sept. 16, 2013 MIT News Office article by Larry Hardesty titled "Graphene could yield cheaper optical chips - Researchers show that graphene — atom-thick sheets of carbon — could be used in photodetectors, devices that translate optical signals to electrical," also posted below.

Graphene — which consists of atom-thick sheets of carbon atoms arranged hexagonally — is the new wonder material: Flexible, lightweight and incredibly conductive electrically, it’s also the strongest material known to man.

In the latest issue of Nature Photonics, researchers at MIT, Columbia University and IBM’s T. J. Watson Research Center describe a promising new application of graphene, in the photodetectors that would convert optical signals to electrical signals in integrated optoelectronic computer chips. Using light rather than electricity to move data both within and between computer chips could drastically reduce their power consumption and heat production, problems that loom ever larger as chips’ computational capacity increases.

Optoelectronic devices built from graphene could be much simpler in design than those made from other materials. If a method for efficiently depositing layers of graphene — a major area of research in materials science — can be found, it could ultimately lead to optoelectronic chips that are simpler and cheaper to manufacture.

“Another advantage, besides the possibility of making device fabrication simpler, is that the high mobility and ultrahigh carrier-saturation velocity of electrons in graphene makes for very fast detectors and modulators,” says Dirk Englund, the Jamieson Career Development Assistant Professor of Electrical Engineering and Computer Science at MIT, who led the new research.

Graphene is also responsive to a wider range of light frequencies than the materials typically used in photodetectors, so graphene-based optoelectronic chips could conceivably use a broader-band optical signal, enabling them to move data more efficiently. “A two-micron photon just flies straight through a germanium photodetector,” Englund says, “but it is absorbed and leads to measurable current — as we actually show in the paper — in graphene.”
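Englund's two-micron example can be checked with a little arithmetic. The sketch below is a simplified picture, not the researchers' analysis: it compares the photon energy with a material's bandgap and treats a photon as absorbable only if its energy exceeds the gap. The germanium bandgap of roughly 0.66 eV is an assumed textbook room-temperature value that does not appear in the article, and the function names are hypothetical.

```python
# Photon energy versus bandgap: a simplified absorption check.
# Assumption: a photon is absorbed only if its energy exceeds the bandgap.

H_C_EV_UM = 1.239842  # Planck constant times speed of light, in eV·µm

# Assumed textbook values, not taken from the article.
GERMANIUM_BANDGAP_EV = 0.66  # approximate room-temperature gap
GRAPHENE_BANDGAP_EV = 0.0    # pristine graphene has no bandgap


def photon_energy_ev(wavelength_um: float) -> float:
    """Return the photon energy in eV for a wavelength in micrometres."""
    if wavelength_um <= 0:
        raise ValueError("wavelength must be positive")
    return H_C_EV_UM / wavelength_um


def can_absorb(wavelength_um: float, bandgap_ev: float) -> bool:
    """True if the photon carries more energy than the bandgap."""
    return photon_energy_ev(wavelength_um) > bandgap_ev


if __name__ == "__main__":
    print(f"2 µm photon energy: {photon_energy_ev(2.0):.2f} eV")
    print("germanium absorbs:", can_absorb(2.0, GERMANIUM_BANDGAP_EV))
    print("graphene absorbs: ", can_absorb(2.0, GRAPHENE_BANDGAP_EV))
```

Under these assumptions a two-micron photon carries about 0.62 eV, just under the assumed germanium gap, while gapless graphene can still absorb it.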

Unbiased account

As Englund explains, the problem with graphene as a photodetector has traditionally been its low responsivity: A sheet of graphene will convert only about 2 percent of the light passing through it into an electrical current. That’s actually quite high for a material only an atom thick, but it’s still too low to be useful.

When light strikes a photoelectric material like germanium or graphene, it kicks electrons orbiting atoms of the material into a higher energy state, where they’re free to flow in an electrical current. If they don’t immediately begin to move, however, they’ll usually drop back down into the lower energy state. So one standard trick for increasing a photodetector’s responsivity is to “bias” it — to apply a voltage across it that causes the electrons to flow before they lose energy.

The problem is that the voltage will inevitably induce a slight background current that adds “noise” to the detector’s readings, making them less reliable. So Englund, his student Ren-Jye Shiue, Columbia’s Xuetao Gan — who, together with Shiue, is lead author on the paper — and their collaborators instead used a photodetector design developed by Fengnian Xia and his colleagues at IBM, which produces a slight bias without the application of a voltage.

In the new design, light enters the detector through a silicon channel — a “waveguide” — etched into the surface of a chip. The layer of graphene is deposited on top of and perpendicular to the waveguide. On either side of the graphene layer is a gold electrode. But the electrodes’ placement is asymmetrical: One of them is closer to the waveguide than the other.

“There’s a mismatch between the energy of electrons in the metal contact and in graphene,” Englund says, “and this creates an electric field near the electrode.” When electrons are kicked up by photons in the waveguide, the electric field pulls them to the electrode, creating a current.

Hot topic

In experiments, the researchers found that, unbiased, their detector would generate 16 milliamps of current for each watt of incoming light. Its detection frequency was 20 gigahertz — already competitive with germanium. (Some experimental germanium photodetectors have achieved higher speeds, but only when biased.) With the application of a slight bias, the detector could get up to 100 milliamps per watt, a responsivity commensurate with that of germanium.
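To put the responsivity figures in perspective, the sketch below converts them into a photocurrent and an approximate external quantum efficiency (electrons collected per incoming photon). The 16 and 100 milliamps-per-watt values come from the article; the 1.55-micron wavelength and the 1-milliwatt input power are illustrative assumptions, and the function names are hypothetical.

```python
# Responsivity arithmetic: photocurrent and external quantum efficiency.
# Assumptions (not from the article): 1.55 µm light, 1 mW of optical power.

H_C_EV_UM = 1.239842  # Planck constant times speed of light, in eV·µm


def photocurrent_ma(responsivity_ma_per_w: float, optical_power_w: float) -> float:
    """Photocurrent in milliamps for a given responsivity and optical power."""
    return responsivity_ma_per_w * optical_power_w


def external_quantum_efficiency(responsivity_ma_per_w: float,
                                wavelength_um: float) -> float:
    """Electrons collected per incident photon.

    With responsivity R in A/W and photon energy E in eV, the efficiency
    is R * E, because E divided by the electron charge is E in volts.
    """
    responsivity_a_per_w = responsivity_ma_per_w / 1000.0
    photon_energy_ev = H_C_EV_UM / wavelength_um
    return responsivity_a_per_w * photon_energy_ev


if __name__ == "__main__":
    assumed_wavelength_um = 1.55  # assumption, not stated in the article
    assumed_power_w = 1e-3        # assumption: 1 mW of incoming light
    for label, r in (("unbiased", 16.0), ("biased", 100.0)):
        current = photocurrent_ma(r, assumed_power_w)
        eqe = external_quantum_efficiency(r, assumed_wavelength_um)
        print(f"{label}: {current:.3f} mA, efficiency ~ {eqe:.1%}")
```

At the assumed wavelength, 16 milliamps per watt corresponds to roughly 1.3 percent of incoming photons producing a collected electron, and 100 milliamps per watt to roughly 8 percent.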

Englund is confident that better engineering — thinner electrodes, or a narrower waveguide — could yield a photodetector whose responsivity is even higher. “It’s a matter of engineering,” he says. “We are already testing some new tricks to get another factor of two or four.”

“I think it’s great work,” says Thomas Mueller, an assistant professor at the Vienna University of Technology’s Photonics Institute. “The main drawback of graphene photodetectors was always their low responsivity. Now they have two orders of magnitude higher responsivity, which is really great.”

“The other thing that I like very much is the integration with a silicon chip,” Mueller adds, “which really shows that, in the end, you’ll be able to integrate graphene into computer chips to realize optical links and things like that.”

In fact, the same issue of Nature Photonics also features a paper by Mueller and colleagues, reporting work very similar to that conducted by Englund and his team. “We did not know that we were doing the same thing,” Mueller says. “But I’m very happy that two papers are coming out in the same journal on the same topic, which shows that it’s an important thing, I think.”

The chief difference between the two groups’ work, Mueller says, is that “we used slightly different geometry.” But, he adds, “Honestly, I think that Dirk’s geometry is more practical. We were also thinking about the same thing, but we didn’t have the technical capabilities to do this. There’s one process that they do that we were not able to do.”

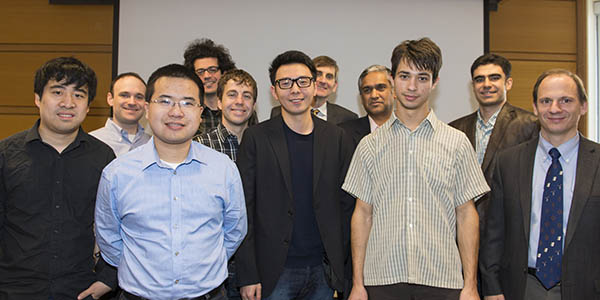



EECS Department Head Anantha Chandrakasan announced today the appointment of

EECS Department Head Anantha Chandrakasan announced today the appointment of

Grimson and Schmidt are named for new leadership roles as Chancellor for Academic Advancement and MIT Acting Provost, respectively

Grimson and Schmidt are named for new leadership roles as Chancellor for Academic Advancement and MIT Acting Provost, respectively

EECS Department Head Anantha Chandrakasan announced today the appointment of Professor

EECS Department Head Anantha Chandrakasan announced today the appointment of Professor

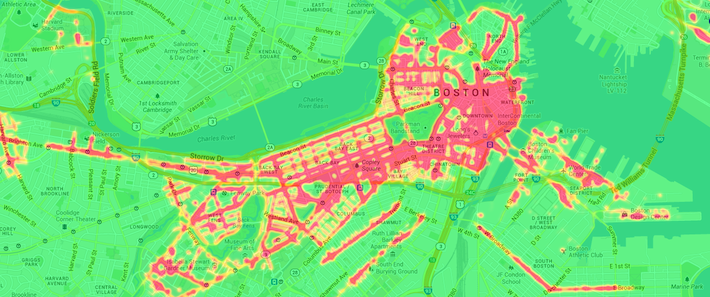

At this month’s IEEE Conference on Intelligent Transport Systems,

At this month’s IEEE Conference on Intelligent Transport Systems,